Hey there, tech enthusiasts! Ever feel like your system is slowing down and you’re not sure why? Well, maybe it’s time to talk about cache replacement policy optimization. Yes, it sounds fancy, but don’t worry—we’re going to break it down together. Think of this guide as your buddy walking you through the tech party where all these cool nerdy ideas hang out.

We’ll start by chatting about the different kinds of cache replacement policies, elbowing our way through a sea of acronyms and dizzying algorithms. Hang tight; it’ll be both fun and educational—promise! Whether you’re running a sophisticated server setup or just trying to make your personal computer a little snappier, understanding how caching works can make a world of difference.

Each section in our guide is crafted to remove some mystery around optimizing your system’s cache decisions. From basic concepts to nitty-gritty optimization tactics, we’ve got you covered. So grab a cup of coffee, make yourself comfortable, and let’s dive into this cache replacement policy optimization guide together.

What Is Cache Replacement Policy?

Alright, let’s get into the nitty-gritty of cache replacement policies, the secret sauce behind how our devices speed things up (or sometimes don’t). Imagine a cache as a little black book your computer keeps close at hand: it stores frequently accessed data so your system doesn’t have to fetch it from slower memory or storage every single time.

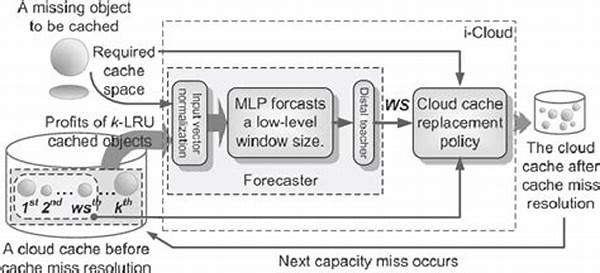

Now, the policy part comes in when your cache (the book) runs out of pages. When new data needs a spot, which old entry should be evicted to make room? That’s the “replacement” decision. Choose the wrong one, and your system soon has to fetch that data again from slower memory, which slows things down instead of speeding them up. That’s where different strategies, or “policies,” come in, like Least Recently Used (LRU), First In First Out (FIFO), and others.
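
To make the idea concrete, here’s a minimal Python sketch of the LRU policy, assuming a simple in-memory key-value cache with a fixed number of slots. The `LRUCache` class and its `get`/`put` methods are our own illustrative names, not any particular library’s API.

```python
from collections import OrderedDict


class LRUCache:
    """Fixed-capacity cache that evicts the least recently used key when full."""

    def __init__(self, capacity):
        if capacity <= 0:
            raise ValueError("capacity must be positive")
        self.capacity = capacity
        self._data = OrderedDict()  # ordered from least to most recently used

    def get(self, key, default=None):
        if key not in self._data:
            return default  # cache miss
        self._data.move_to_end(key)  # a hit makes this key the most recently used
        return self._data[key]

    def put(self, key, value):
        """Store a value; return the evicted key, or None if nothing was evicted."""
        if key in self._data:
            self._data.move_to_end(key)
        self._data[key] = value
        if len(self._data) > self.capacity:
            evicted_key, _ = self._data.popitem(last=False)  # front = least recently used
            return evicted_key
        return None


cache = LRUCache(capacity=2)
cache.put("a", 1)
cache.put("b", 2)
cache.get("a")               # "a" becomes the most recently used key
evicted = cache.put("c", 3)  # cache is full, so "b" is evicted
print(evicted)  # b
```

An `OrderedDict` keeps both the lookup and the reordering cheap; a production cache would also need things like thread safety, which this sketch skips.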

Finding the right balance is key. It’s not only about freeing up space but also about keeping the data you’re most likely to need next in the fast cache. Think of this guide as a treasure map through the labyrinth of tech jargon, leading you to that sweet spot of optimal system performance. So, let’s plunge ahead with even more details!

Tactics for Cache Replacement Optimization

Loving the journey through our cache replacement policy optimization guide? Here are a few tidbits:

1. Choose Wisely: Your choice of policy—be it LRU, FIFO, or another cool acronym—affects performance.

2. Analyze Access Patterns: Knowing your system’s data access patterns can guide you to the right policy.

3. Monitor Performance: Keep an eye on cache hit and miss ratios to tweak and refine policy choices.

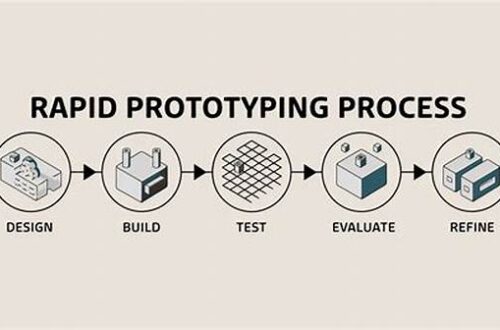

4. Experiment and Iterate: Don’t be afraid of trial and error—sometimes the pathway to speed is circuitous.

5. Stay Updated: As your workloads and software change, the policy that worked best before may no longer fit, so revisit your choice from time to time.
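
Tactics 2 through 4 can be tried out on paper before touching a real system. Here’s a minimal sketch, assuming you can capture a list of accessed keys: it replays that trace through a FIFO or LRU cache and reports the hit ratio. The `simulate` function and the sample trace are hypothetical, purely for illustration.

```python
from collections import OrderedDict


def simulate(trace, capacity, policy):
    """Replay a sequence of key accesses and return the hit ratio."""
    if policy not in ("fifo", "lru"):
        raise ValueError("policy must be 'fifo' or 'lru'")
    if capacity <= 0:
        raise ValueError("capacity must be positive")
    cache = OrderedDict()  # front of the dict = next key to evict
    hits = 0
    for key in trace:
        if key in cache:
            hits += 1
            if policy == "lru":
                cache.move_to_end(key)  # LRU refreshes a key on every hit; FIFO ignores hits
        else:
            if len(cache) >= capacity:
                cache.popitem(last=False)  # evict oldest (FIFO) or least recently used (LRU)
            cache[key] = None
    return hits / len(trace) if trace else 0.0


# Hypothetical access trace; in practice you would replay keys logged from your own system.
trace = ["a", "b", "a", "c", "a", "b"]
for policy in ("fifo", "lru"):
    print(f"{policy}: hit ratio {simulate(trace, 2, policy):.2f}")
# fifo: hit ratio 0.17
# lru: hit ratio 0.33
```

On this tiny trace, LRU wins because the key "a" keeps coming back; a different access pattern could flip the result, which is exactly why measuring beats guessing.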

Benefits of Optimizing Cache Policies

Are you wondering why mastering cache replacement policy optimization is worth your time? Let’s unravel the goodies you get. First, faster software loads. A higher cache hit ratio means more requests are served from fast memory instead of slower storage, so there are fewer endless spinning wheels while you wait for apps to open.
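
To see why a higher hit ratio translates into speed, the classic average memory access time (AMAT) formula helps. Here $h$ is the cache hit ratio, $t_{\text{hit}}$ the time to serve a hit, and $t_{\text{penalty}}$ the extra time a miss costs. The 1 ns and 100 ns figures below are hypothetical round numbers chosen only to keep the arithmetic simple.

```latex
\begin{aligned}
\text{AMAT} &= t_{\text{hit}} + (1 - h)\, t_{\text{penalty}} \\
h = 0.90:\quad \text{AMAT} &= 1 + (1 - 0.90) \times 100 = 11\ \text{ns} \\
h = 0.95:\quad \text{AMAT} &= 1 + (1 - 0.95) \times 100 = 6\ \text{ns}
\end{aligned}
```

In this made-up example, raising the hit ratio by five percentage points cuts the average access time from 11 ns to 6 ns, nearly in half.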

Another potential win is extended hardware life. Fewer cache misses mean fewer trips to your hard disk drives (HDDs) or solid-state drives (SSDs), which may reduce wear and tear, especially in environments with frequent data requests. That can mean less time spent upgrading and more time enjoying silky-smooth performance, or, you know, whatever you like to do on a fast machine.

Let’s not forget the cost efficiency! Optimizing cache policies makes every bit of your computing resources work smarter and harder. Imagine getting more returns from your current infrastructure without shelling out extra cash for upgrades. It’s your ticket to optimizing current investments and adding a bit more zing without breaking the bank.

Understanding Policy Types

Have you decided to dig deeper into our trusty cache replacement policy optimization guide? Different policy types hold the key.

1. LRU (Least Recently Used): Evicts the item that hasn’t been accessed for the longest time, keeping recently used data around.

2. FIFO (First In First Out): Think of it as an orderly queue where the oldest data gets the boot first.

3. LFU (Least Frequently Used): Evicts the item accessed the fewest times, keeping the most frequently used data over a period of time.

4. Random Replacement: Evicts a randomly chosen entry, without tracking usage at all.

5. MRU (Most Recently Used): Opposite of LRU; evicts the most recently used item.

6. Adaptive Policies: Adjust their eviction decisions on the fly based on observed access patterns.

7. ARC (Adaptive Replacement Cache): Balances recency and frequency by adaptively splitting the cache between recently used and frequently used data.

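8. SLRU (Segmented LRU): Splits the cache into a probationary segment and a protected segment; data that gets accessed again is promoted to the protected segment, so one-off accesses are evicted first.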

9. LFUDA (Least Frequently Used with Dynamic Aging): Adds an aging factor to LFU so that items that were popular long ago but are no longer used can eventually be evicted.

10. TLRU (Time Aware LRU): Adds a time dimension to the LRU strategy.
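
For one of the less familiar entries in the list above, here’s a minimal Python sketch of segmented LRU, assuming the classic two-segment layout: new keys land in a probationary segment, a second access promotes them to a protected segment, and evictions only ever leave from probation. The `SegmentedLRU` class and the segment sizes are hypothetical; real implementations (not to mention ARC, LFUDA, or TLRU) carry more bookkeeping than this.

```python
from collections import OrderedDict


class SegmentedLRU:
    """Two LRU-ordered segments: probation for new keys, protected for re-used keys."""

    def __init__(self, probation_size, protected_size):
        self.probation = OrderedDict()  # least recently used first
        self.protected = OrderedDict()  # least recently used first
        self.probation_size = probation_size
        self.protected_size = protected_size

    def get(self, key):
        if key in self.protected:
            self.protected.move_to_end(key)  # refresh within the protected segment
            return self.protected[key]
        if key in self.probation:
            value = self.probation.pop(key)  # a repeat access promotes the key
            self.protected[key] = value
            if len(self.protected) > self.protected_size:
                # Protected overflowed: demote its LRU key back into probation.
                demoted, demoted_value = self.protected.popitem(last=False)
                self._insert_probation(demoted, demoted_value)
            return value
        return None  # miss

    def put(self, key, value):
        if key in self.protected:
            self.protected[key] = value
            self.get(key)  # count the write as an access
        elif key in self.probation:
            self.probation[key] = value
            self.get(key)  # count the write as an access (promotes the key)
        else:
            self._insert_probation(key, value)

    def _insert_probation(self, key, value):
        self.probation[key] = value  # new or demoted keys enter at the MRU end
        if len(self.probation) > self.probation_size:
            self.probation.popitem(last=False)  # evict the LRU key from probation


slru = SegmentedLRU(probation_size=2, protected_size=1)
slru.put("a", 1)
slru.put("b", 2)
slru.get("a")     # second access: "a" moves to the protected segment
slru.put("c", 3)
slru.put("d", 4)  # probation overflows, so "b" (seen only once) is evicted
```

That’s what makes SLRU resistant to one-off scans: a burst of single-use keys churns through probation without pushing out the keys that earned a spot in the protected segment.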

Real-World Implementation

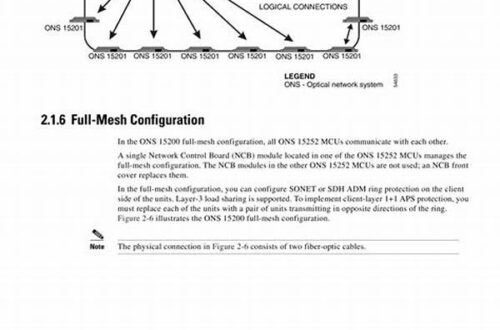

Diving into real-world applications, this isn’t just theory. Many real-world systems, like web browsers and databases, already rely on cache policies. If your work involves IT, understanding them can make you the workplace hero who gets servers and systems running faster and more efficiently.

For developers or system administrators, implementing the right cache policy can make applications faster without significant changes to the codebase. Picture this—an improved user experience and a pat on your back from the boss, all while you sip on your favorite drink. From gaming environments that demand speed to online services that require stability, understanding these key principles can drastically elevate user satisfaction.
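
Python’s standard library shows how small that code change can be: `functools.lru_cache` wraps an existing function in an LRU cache with a single decorator line, and `cache_info()` reports hits and misses. The `load_profile` function below is a hypothetical stand-in for a slow database or network call.

```python
import time
from functools import lru_cache


@lru_cache(maxsize=128)  # keep up to 128 results, evicting the least recently used
def load_profile(user_id):
    """Hypothetical slow lookup standing in for a database or network call."""
    time.sleep(0.01)  # simulate latency
    return f"user-{user_id}"


for uid in [1, 2, 1, 3, 1, 2]:
    load_profile(uid)

print(load_profile.cache_info())
# CacheInfo(hits=3, misses=3, maxsize=128, currsize=3)
```

Only the first call for each user ID pays the simulated latency; the repeats are served straight from the cache.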

Finally, educational institutions, small businesses, and casual users alike will find it worth spending some time with this guide. By choosing the right cache replacement policy for your needs, you can invigorate tired machines and even save money. A little tweak here, another there, and boom! Snappier performance that belies your device’s age, without splurging on newer hardware.

Wrapping Up the Journey

So, there you have it—our cache replacement policy optimization guide has reached its final destination. Isn’t it interesting how such an intricate topic can affect the very essence of your system’s speed and efficiency? It’s like discovering the hidden forces that govern the tech universe, and now you hold the keys to that kingdom.

Beyond the acronyms and heavy tech speak, it’s all about making the tech work for you. Whether you’re implementing sophisticated policies in a booming IT environment or just wrangling your PC into behaving smoothly, this guide gives you stepping stones toward technological finesse.

Remember, though: it’s not a one-time fix. As your tech know-how grows and your systems evolve, revisiting your cache policies helps keep performance where you want it. Just like tech itself, it’s a journey that doesn’t stop. So keep exploring, keep optimizing, and enjoy the benefits of a zippier, more responsive system!