Hey there, fellow tech enthusiast! Have you ever wondered how machines and computers can “see” and make sense of the world around them? It’s a fascinating topic that branches into a multitude of fields, from robotics to augmented reality. One particularly intriguing area is detecting interactions between objects. It’s all about understanding how objects interact within their environment—kind of like how a cup might interact with a table or how a cat might chase a laser pointer. It’s not just about recognizing objects but predicting and interpreting their movements and interactions.

The Basics of Detecting Object Interactions

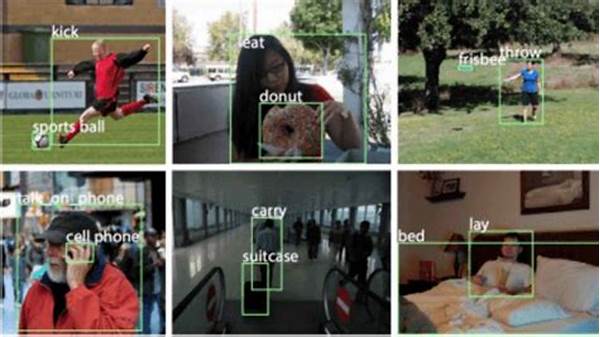

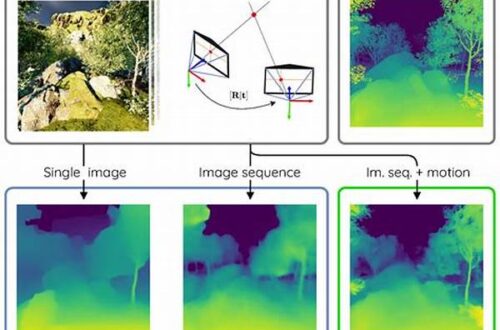

Detecting interactions between objects is not as simple as it might sound. Imagine watching an action movie and trying to predict what will explode next or what the hero will grab to swing across a chasm—it’s a bit like that. In the digital realm, this involves complex algorithms that analyze video data to understand relationships. The challenge is compounded by the vast diversity of objects and the variety of ways they can move. Technologies like machine learning and computer vision are at the forefront of these advancements, helping systems learn from data. They’re like digital detectives, piecing together clues to form a coherent picture of ongoing interactions. To put it simply, detecting interactions between objects is like teaching a computer to understand the story in a scene rather than just identifying characters and props.

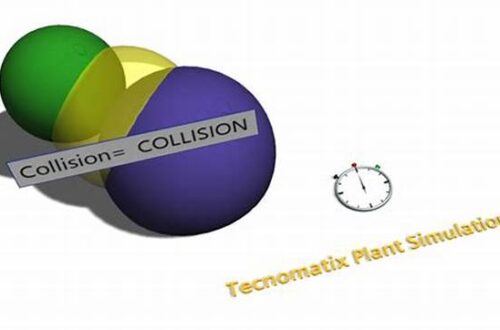

The process often begins with breaking down a continuous stream of data, like a video, into frames that can be analyzed individually. From there, each frame is examined for objects, which are then tracked over time. Speed, trajectory, and proximity to other objects are the key factors considered when determining interactions. The ultimate goal is a system that can accurately predict future interactions, potentially alerting a self-driving car to an impending collision or enabling a robotic assistant to lend a hand at the right moment. It’s a fascinating blend of technologies striving to match human intuition.
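To make the tracking idea a bit more concrete, here is a minimal Python sketch of how speed and proximity might be computed once objects have been detected in each frame. It assumes a detector has already produced bounding boxes as `(x_min, y_min, x_max, y_max)` tuples; the function names, the `near_px` threshold, and the sample boxes are all made up for illustration and don't come from any particular library.

```python
import math

def centroid(box):
    """Center (x, y) of a box given as (x_min, y_min, x_max, y_max)."""
    x_min, y_min, x_max, y_max = box
    return ((x_min + x_max) / 2.0, (y_min + y_max) / 2.0)

def speed(prev_box, curr_box, dt):
    """Centroid speed in pixels per second between two frames dt seconds apart."""
    (x0, y0), (x1, y1) = centroid(prev_box), centroid(curr_box)
    return math.hypot(x1 - x0, y1 - y0) / dt

def distance(box_a, box_b):
    """Distance in pixels between the centroids of two boxes."""
    (ax, ay), (bx, by) = centroid(box_a), centroid(box_b)
    return math.hypot(bx - ax, by - ay)

def is_approaching(track_a, track_b, near_px=50.0):
    """True if two tracks end closer than they started and within near_px.

    near_px is an assumed, hand-picked threshold, not a standard value.
    """
    start = distance(track_a[0], track_b[0])
    end = distance(track_a[-1], track_b[-1])
    return end < start and end <= near_px

# Hypothetical tracks: a hand moving right toward a stationary cup.
hand = [(0, 0, 10, 10), (20, 0, 30, 10), (40, 0, 50, 10)]
cup = [(80, 0, 90, 10)] * 3

print(speed(hand[0], hand[1], dt=0.5))  # hand speed between the first two frames
print(is_approaching(hand, cup))        # is the hand closing in on the cup?
```

A real system would use far richer features than centroids, but the same three ingredients (speed, trajectory, proximity) show up in most approaches.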

Key Aspects of Detecting Interactions Between Objects

1. Understanding Context: Detecting interactions between objects requires the system to understand the context in which objects exist. It’s like knowing a knife and fork belong on the dinner table, not in the toolbox.

2. Time Sensitivity: Interactions unfold over time. Systems must analyze changes from one moment to the next, which makes detecting interactions between objects a fast-paced task.

3. Machine Learning Models: These are the backbone, enabling systems to learn from data patterns. By training on past interactions, they become better at predicting future ones.

4. Real-Time Analysis: Detecting interactions between objects in real time is crucial for applications like autonomous vehicles or augmented reality, where delays could mean disaster.

5. Error Margins: No system detects every interaction correctly. Some real interactions get missed and some false alarms get raised, so balancing precision and adaptability is key to improving results.
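The error-margin point is easier to see with numbers. One common way to measure it (my choice here, not something the list above prescribes) is precision and recall: precision asks how many flagged interactions were real, and recall asks how many real interactions were flagged. The sketch below assumes a detector that outputs a confidence score per candidate interaction; the scores and labels are invented for the example.

```python
def precision_recall(scores, labels, threshold):
    """Precision and recall when flagging every score >= threshold.

    scores: detector confidences; labels: True where an interaction really happened.
    """
    predicted = [s >= threshold for s in scores]
    tp = sum(p and l for p, l in zip(predicted, labels))        # correct alarms
    fp = sum(p and not l for p, l in zip(predicted, labels))    # false alarms
    fn = sum((not p) and l for p, l in zip(predicted, labels))  # missed interactions
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    return precision, recall

# Made-up detector outputs for five candidate interactions.
scores = [0.9, 0.8, 0.6, 0.4, 0.2]
labels = [True, True, False, True, False]

for threshold in (0.3, 0.5, 0.7):
    print(threshold, precision_recall(scores, labels, threshold))
```

Raising the threshold cuts false alarms but misses more real interactions, and lowering it does the opposite. Where to sit on that curve depends on the application.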

Tools and Technologies for Detecting Interactions Between Objects

When it comes to detecting interactions between objects, there are some nifty tools and technologies at the helm. Computer vision takes charge, allowing machines to visually interpret the world. Throw in some machine learning, and you’ve got systems that learn from mountains of data, making sense of objects and their interactions like seasoned pros. Convolutional neural networks (CNNs) are often the go-to for recognizing patterns in visual data. Loosely inspired by human vision, CNNs process images in layers: early layers pick up simple features like edges, and deeper layers combine them into more complex patterns. It’s like teaching a computer to watch a game of soccer and understand not just the players but the evolving dynamics of the match.
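If you’re curious what a single CNN building block actually does, here is a rough pure-Python sketch of one convolution filter sliding over a tiny grayscale image. It is a teaching toy, not how real frameworks implement it: the image, the hand-written kernel, and the `convolve2d` helper are all assumptions for illustration, and a real CNN learns its kernels from data rather than having them typed in.

```python
def convolve2d(image, kernel):
    """Slide a kernel over an image with no padding ("valid" mode).

    Strictly speaking this is cross-correlation, which is what CNN
    layers usually compute under the name "convolution".
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[r + i][c + j] * kernel[i][j]
                for i in range(kh) for j in range(kw))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]

# A tiny image: dark on the left (0), bright on the right (1).
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]

# Hand-written kernel that responds where brightness rises from left to right.
kernel = [[-1, 1]]

feature_map = convolve2d(image, kernel)
for row in feature_map:
    print(row)
```

The output lights up only in the column where dark meets bright, which is the kind of simple edge feature that deeper layers then combine into shapes and objects.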

One fascinating aspect is how these tools feed into prediction. Models trained on past data can estimate which interactions are likely to happen next, giving systems a predictive edge. This kind of foresight is useful in many applications, from more responsive gaming experiences to earlier collision warnings in autonomous vehicles. Essentially, by detecting interactions between objects, we’re paving the way for smarter systems, capable of understanding the present and anticipating future moves.
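Prediction doesn’t have to start with a neural network. As a simple baseline (an assumption on my part, not a claim about what production systems do), you can assume each object keeps moving at its current velocity and ask when the two will be closest. The function names, the `radius` and `horizon` defaults, and the car and pedestrian numbers below are all hypothetical.

```python
import math

def time_to_closest_approach(pos_a, vel_a, pos_b, vel_b):
    """Time (>= 0) at which two constant-velocity points are closest."""
    rx, ry = pos_b[0] - pos_a[0], pos_b[1] - pos_a[1]   # relative position
    vx, vy = vel_b[0] - vel_a[0], vel_b[1] - vel_a[1]   # relative velocity
    v2 = vx * vx + vy * vy
    if v2 == 0:
        return 0.0  # same velocity: the gap never changes
    return max(-(rx * vx + ry * vy) / v2, 0.0)

def min_distance(pos_a, vel_a, pos_b, vel_b):
    """Smallest future distance between the two points."""
    t = time_to_closest_approach(pos_a, vel_a, pos_b, vel_b)
    dx = (pos_b[0] - pos_a[0]) + (vel_b[0] - vel_a[0]) * t
    dy = (pos_b[1] - pos_a[1]) + (vel_b[1] - vel_a[1]) * t
    return math.hypot(dx, dy)

def will_collide(pos_a, vel_a, pos_b, vel_b, radius=1.0, horizon=5.0):
    """True if the points come within radius of each other inside the horizon.

    radius (meters) and horizon (seconds) are assumed values for illustration.
    """
    if time_to_closest_approach(pos_a, vel_a, pos_b, vel_b) > horizon:
        return False
    return min_distance(pos_a, vel_a, pos_b, vel_b) <= radius

car_pos, car_vel = (0.0, 0.0), (10.0, 0.0)   # driving along the x-axis
walker_pos = (30.0, -3.0)                     # beside the road, 30 m ahead

print(will_collide(car_pos, car_vel, walker_pos, (0.0, 1.0)))  # walking toward the road
print(will_collide(car_pos, car_vel, walker_pos, (0.0, 0.0)))  # standing still
```

Learned models earn their keep precisely where this baseline breaks down, such as when a pedestrian is about to change direction.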

Usability and Importance of Detecting Interactions Between Objects

1. Enhanced Safety: In vehicles, detecting interactions between objects is key to preventing collisions and ensuring passenger safety.

2. Augmented Reality: Helps mix virtual and real-world elements, promoting interactive experiences.

3. Robotics: Promotes autonomy in robots, allowing them to interact seamlessly with their environment.

4. Gaming: Offers more immersive and interactive experiences by understanding player actions.

5. Surveillance: Identifies unusual activities by monitoring interactions, boosting security measures.

6. Predictive Maintenance: Analyzes equipment interactions to predict faults before they occur.

7. Smart Homes: Interprets resident behaviors to automate home functions.

8. Healthcare: Monitors patient movements, offering insights into their interaction with medical equipment.

9. Retail: Analyzes customer interactions with products, refining marketing approaches.

10. Manufacturing: Optimizes workflows by detecting inefficiencies in human and machine interaction.

Real-World Applications of Detecting Interactions Between Objects

Detecting interactions between objects isn’t just an academic exercise; it finds its way into our everyday tech more often than you might think! Let’s talk about self-driving cars first. These vehicles show why detecting interactions between objects is crucial: they need to distinguish between a pedestrian about to step off the curb and one standing still. It’s the difference between a smooth drive and an accident. Similarly, smart home devices often use these technologies to understand human motion, allowing for personalized and efficient home automation. When your home assistant seems to know exactly when to suggest certain tasks or reminders, it’s not magic, it’s technology at work.

Now, stepping into the realm of entertainment, video games present an exciting landscape for these tech advances. Detecting interactions between objects allows for highly immersive environments, where the game reacts to your every move with astonishing accuracy. In manufacturing, this tech is a game changer. Imagine a system that can distinguish between different materials on a conveyor belt, sorting them without human intervention. That’s efficiency and innovation wrapped into one! The potential beyond these examples is vast, stretching into anything from agriculture, where machines identify and sort produce, to healthcare, monitoring patient-robot interactions.

Challenges in Detecting Interactions Between Objects

Sure, detecting interactions between objects is cool, but it doesn’t come without its fair share of challenges. For starters, think about the sheer volume of data these systems need to process—it’s massive! You need robust systems to handle this data without crashing. Then, there’s the accuracy issue. Detecting interactions accurately is vital, and getting to that point requires meticulous programming and training. The myriad possibilities of object interactions can throw a wrench in even the most carefully crafted scenario-based systems. It takes continuous learning from immense datasets, refining algorithms time and again.

Another conundrum is bias. Data-driven systems reflect the biases in the data they’re trained on, so keeping the detection of object interactions unbiased is an ongoing challenge. Imagine a system trained mostly on urban traffic patterns: it might struggle in rural settings, which would be a disaster for self-driving cars. Lastly, integrating these systems into existing technologies can be a major hurdle, often requiring a complete overhaul rather than a simple upgrade. Still, each of these challenges is being tackled head-on by researchers and developers. As they find solutions, the field only gets more thrilling, promising even bigger breakthroughs in the future.
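One practical way to spot this kind of bias is to break accuracy down by setting instead of reporting a single overall number. Here is a small Python sketch of that idea. The `accuracy_by_group` helper and the records are invented for illustration; real evaluation data would come from labeled test footage.

```python
from collections import defaultdict

def accuracy_by_group(records):
    """Accuracy per group from (group, predicted, actual) records."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for group, predicted, actual in records:
        total[group] += 1
        correct[group] += int(predicted == actual)
    return {group: correct[group] / total[group] for group in total}

# Made-up evaluation results: (setting, predicted interaction?, real interaction?)
records = [
    ("urban", True, True),
    ("urban", False, False),
    ("urban", True, True),
    ("urban", True, False),
    ("rural", False, True),
    ("rural", True, True),
]

result = accuracy_by_group(records)
print(result)
```

A large gap between groups is a hint that the training data underrepresents one of them, even when the overall accuracy looks healthy.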

Summary of Detecting Interactions Between Objects

So, there you have it: a whirlwind tour through the world of detecting interactions between objects. These technologies aren’t just about identifying a chair or a dog in a picture but about understanding how a cat might bump into that chair or leap over that dog. It’s like giving machines a form of intuition, enabling them to anticipate and react to changes in real time. As this tech crosses over into fields like transportation, gaming, healthcare, and manufacturing, it’s opening new doors and challenging our previous limits.

As we develop technologies for detecting interactions between objects, we’re paving the way for safer cars, smarter homes, and incredibly immersive digital experiences. Of course, the journey isn’t free of hurdles—data handling, accuracy, and bias remain significant challenges, but each step forward offers promising solutions. Innovations in artificial intelligence and machine learning guide the way, empowering these systems to become ever-cleverer detectives of their environments. So, whether you’re a tech enthusiast or a curious observer, it’s an exciting time to see—or rather, detect—where this journey will lead us next!