Hey there, tech enthusiasts! Are you curious about how your computer keeps running smoothly even while multitasking like a pro? Well, that’s where cache memory management strategies come into play! In this blog post, we’re diving deep into the world of cache memory – that secret sauce that helps your device manage tasks efficiently. Sit back, grab a coffee, and let’s explore these fascinating strategies together.

Why Cache Memory Management Strategies Matter

So, why do cache memory management strategies really matter? Imagine your computer is trying to juggle multiple applications at once. Without a good strategy, it would be like trying to carry water in a bucket full of holes. Cache memory is a small, very fast store that sits close to the processor and holds copies of recently and frequently used data, so your device can work faster than a barista during the Monday morning rush. By managing this cache smartly, we make sure your computer spends less time fetching data from slower main memory or storage and more time getting work done. It’s like having a super-organized filing cabinet at arm’s reach: it saves time, boosts productivity, and keeps frustration at bay! Understanding these strategies pays off, especially if you’re keen on maximizing your system’s performance or developing applications, whether you’re a tech newbie or a seasoned pro.

Common Cache Management Techniques

These strategies start with replacement policies, which decide what gets evicted when the cache is full. They’re like your grandma’s secret recipe – everyone has a version, and they swear by it. Here are five common ones:

1. LRU (Least Recently Used): Evicts the entry that hasn’t been accessed for the longest time. Think of it like cleaning out your fridge – you toss out whatever has sat untouched the longest.

2. FIFO (First In, First Out): Evicts the entry that was added first, no matter how often it has been used since. Similar to standing in line at Starbucks – first come, first served.

3. LFU (Least Frequently Used): Evicts the entry with the fewest accesses. Like your gym membership – if you don’t use it, you lose it.

4. Random Replacement: Evicts a randomly chosen entry. It’s cheap to implement, but it’s like lottery tickets – sometimes it works, and other times, not so much.

5. Adaptive Replacement: Balances recency and frequency, shifting between LRU-like and LFU-like behavior as usage patterns change, like a tech-savvy chameleon.
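
To make the first three policies concrete, here is a minimal Python sketch that replays a made-up sequence of requests against a tiny cache and counts hits under FIFO, LRU, and LFU. The function names, the trace, and the capacity are illustrative assumptions, and the LFU version uses one simple tie-break (evict the oldest of the least-used entries). Random and adaptive replacement are not modeled here.

```python
from collections import OrderedDict, deque

def simulate_fifo(trace, capacity):
    """Count hits for FIFO: evict the entry inserted first (capacity >= 1)."""
    cache = set()
    order = deque()  # keys in insertion order, oldest on the left
    hits = 0
    for key in trace:
        if key in cache:
            hits += 1  # FIFO ignores hits: insertion order is not updated
            continue
        if len(cache) == capacity:
            cache.remove(order.popleft())  # evict the oldest insertion
        cache.add(key)
        order.append(key)
    return hits

def simulate_lru(trace, capacity):
    """Count hits for LRU: evict the entry untouched for the longest time."""
    cache = OrderedDict()  # least recently used key at the front
    hits = 0
    for key in trace:
        if key in cache:
            hits += 1
            cache.move_to_end(key)  # mark as most recently used
            continue
        if len(cache) == capacity:
            cache.popitem(last=False)  # evict the least recently used key
        cache[key] = True
    return hits

def simulate_lfu(trace, capacity):
    """Count hits for LFU: evict the entry with the fewest accesses.

    Ties go to the key inserted earliest, because dicts iterate in
    insertion order and min() returns the first minimal key it sees.
    """
    counts = {}
    hits = 0
    for key in trace:
        if key in counts:
            hits += 1
            counts[key] += 1
            continue
        if len(counts) == capacity:
            del counts[min(counts, key=counts.get)]
        counts[key] = 1
    return hits

trace = ["A", "B", "C", "A", "D", "A", "B", "E", "A", "B"]  # made-up requests
for name, sim in [("FIFO", simulate_fifo), ("LRU", simulate_lru), ("LFU", simulate_lfu)]:
    print(name, sim(trace, 3), "hits out of", len(trace))
# FIFO 3 hits out of 10
# LRU 4 hits out of 10
# LFU 4 hits out of 10
```

On this particular trace, LRU and LFU both keep the popular key "A" around, while FIFO evicts it just because it arrived first. A different trace could rank the policies differently, which is why no single policy wins everywhere.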

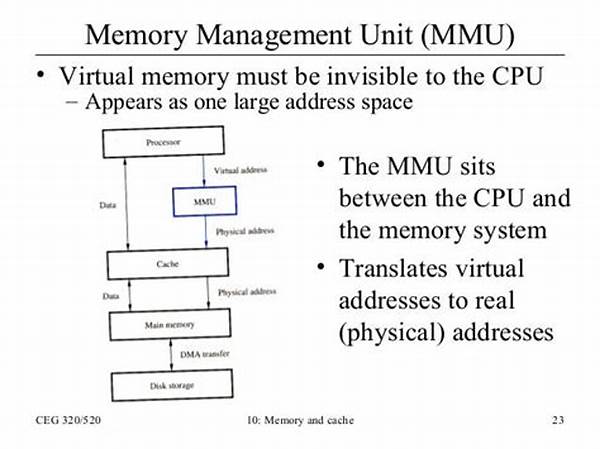

The Role of Hardware in Cache Memory Management

Let’s chat about how hardware shapes cache memory management. Your device’s hardware acts like an orchestra conductor, making sure all parts perform harmoniously. Cache memory sits on or very close to the CPU, so a cache hit is served in nanoseconds, while a trip out to main memory takes many times longer. This proximity means anything your processor needs often is right there, waiting like a well-organized desktop. Effective hardware strategies reduce latency and keep the processor fed with data, which helps the device handle demanding tasks smoothly. This synergy between hardware and memory management strategies forms the backbone of an efficient computing experience, whether you’re editing a video or playing the latest game. Hardware designers keep refining these strategies to match advances in software and growing processing demands.
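
The payoff of keeping data close to the CPU is often summarized with the textbook average memory access time (AMAT) formula: hit time + miss rate × miss penalty, applied level by level. The sketch below computes it in Python. The function name and all latencies and miss rates are illustrative assumptions, not measurements of any real processor.

```python
def amat(levels, memory_latency_ns):
    """Average memory access time (AMAT) for a cache hierarchy.

    levels: list of (hit_time_ns, local_miss_rate) pairs, ordered from the
        level closest to the CPU outward. A local miss rate is the fraction
        of accesses reaching that level which also miss there.
    memory_latency_ns: time to fetch from main memory after the last level misses.
    """
    time_ns = memory_latency_ns
    # Work from the outermost level inward: each level costs its hit time,
    # plus (on a miss) the average cost of going one level further out.
    for hit_time_ns, miss_rate in reversed(levels):
        time_ns = hit_time_ns + miss_rate * time_ns
    return time_ns

# Illustrative numbers only, not measurements of a real CPU.
print(f"no cache: {amat([], 100.0):.1f} ns")                           # no cache: 100.0 ns
print(f"L1 only:  {amat([(1.0, 0.05)], 100.0):.1f} ns")                # L1 only:  6.0 ns
print(f"L1 + L2:  {amat([(1.0, 0.05), (10.0, 0.20)], 100.0):.1f} ns")  # L1 + L2:  2.5 ns
```

With these made-up numbers, a single cache level cuts the average from 100 ns to 6 ns, and a second level brings it down further because most L1 misses are caught before they reach main memory.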

Different Approaches to Cache Memory Management

Beyond deciding what to evict, hardware designers and developers use many other approaches to optimize cache performance:

1. Write-Through Caching: Every write updates both the cache and main memory at the same time, which keeps them consistent at the cost of more memory traffic.

2. Write-Back Caching: Writes update only the cache and mark the line as dirty; main memory is updated later, when that line is evicted. This cuts memory traffic, but memory can briefly hold stale data.

3. Hierarchical Caching: Organizes cache into multiple levels (such as L1, L2, and L3), from small and fast to larger and slower.

4. Distributed Caching: Spreads cache data across multiple systems to enhance scalability.

5. Cache Partitioning: Divides cache into segments for specific applications or data types.

6. Dynamic Caching: Adjusts based on real-time needs and data access patterns.

7. Content-Aware Caching: Tailors data storage based on content type and usage.

8. Predictive Caching: Uses algorithms to predict which data will be needed next and load it ahead of time (often called prefetching).

9. Cache Compression: Reduces the data size to fit more into available cache space.

10. Circular Buffering: Manages data flow cyclically within a fixed-size buffer, so new data reuses the space of the oldest entries instead of overflowing.
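
The difference between write-through and write-back shows up in how often writes reach main memory. This minimal Python sketch counts those memory writes for a stream of store addresses. The function name, the fully associative LRU cache, the write-allocate choice, the final flush, and the sample trace are all simplifying assumptions, not a model of any specific processor.

```python
from collections import OrderedDict

def memory_writes(trace, capacity, policy):
    """Count how many stores reach main memory.

    Simplifying assumptions: a fully associative cache of `capacity` lines
    (capacity >= 1) with LRU eviction, write-allocate on a miss, and a final
    flush of any dirty lines at the end of the trace.
    """
    if policy not in ("write-through", "write-back"):
        raise ValueError(f"unknown policy: {policy}")
    cache = OrderedDict()  # address -> dirty flag, least recently used first
    writes_to_memory = 0
    for addr in trace:
        if addr in cache:
            cache.move_to_end(addr)  # hit: mark as most recently used
        elif len(cache) == capacity:
            _, dirty = cache.popitem(last=False)  # miss on a full cache: evict LRU line
            if dirty:
                writes_to_memory += 1  # write-back pays for the deferred write now
        if policy == "write-through":
            cache[addr] = False  # cache and memory updated together, line stays clean
            writes_to_memory += 1
        else:
            cache[addr] = True  # only the cache is updated, memory is now stale
    writes_to_memory += sum(cache.values())  # flush remaining dirty lines
    return writes_to_memory

trace = [0, 0, 0, 1, 1, 0, 2, 0]  # made-up store addresses
print(memory_writes(trace, 2, "write-through"))  # 8
print(memory_writes(trace, 2, "write-back"))     # 3
```

Because the same few addresses are written repeatedly, write-back absorbs most of those stores in the cache and only pays for a memory write when a dirty line is evicted or flushed, while write-through sends every store to memory.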

How Software Utilizes Cache Memory Management Strategies

Ever wondered how software taps into cache memory management strategies to boost performance? Well, it’s like having a culinary sous-chef by your side! Software uses algorithms to decide which pieces of data are ‘hotcakes’ – accessed often enough to be worth caching. Keeping that hot data close at hand means less waiting on slower storage or the network. Imagine opening your favorite app, and it’s ready to roll instantly; that’s cache at work. These strategies help shorten load times, reduce power consumption, and improve overall user satisfaction. For instance, when you stream a YouTube video without pesky buffering delays, or when your favorite game loads seamlessly, caching is doing much of the heavy lifting behind the scenes. Developers keep refining these strategies to build software that’s not only fast and responsive but also efficient with system resources.
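
In application code, the same idea often shows up as memoization. Python’s standard library includes `functools.lru_cache`, a decorator that keeps the results of recent calls and evicts the least recently used ones once `maxsize` is reached. In this sketch, `load_thumbnail` is a hypothetical stand-in for a slow disk or network lookup, and the hypothetical `fetch_count` counter is only there to show how often the slow path actually runs.

```python
from functools import lru_cache

fetch_count = 0  # counts how many times the slow path really runs

@lru_cache(maxsize=128)  # keep up to 128 recent results, evicting LRU entries beyond that
def load_thumbnail(video_id: str) -> str:
    """Hypothetical slow lookup standing in for a disk or network fetch."""
    global fetch_count
    fetch_count += 1
    return f"thumbnail-for-{video_id}"

for vid in ["intro", "intro", "trailer", "intro"]:
    load_thumbnail(vid)

print(fetch_count)                  # 2
print(load_thumbnail.cache_info())  # CacheInfo(hits=2, misses=2, maxsize=128, currsize=2)
```

Only the first request for each video runs the slow path; repeat requests for "intro" are answered straight from the cache.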

Modern Challenges in Cache Memory Management

Today’s digital ecosystem brings new challenges for cache memory management. With the surge in cloud computing and IoT devices, managing cache efficiently has become more complex than ever. Devices have to handle larger datasets, and keeping the cache agile is crucial to prevent bottlenecks. As technology progresses, there’s a continuous race to develop strategies that are flexible and scalable. One significant challenge is keeping cache systems secure, for example against timing side-channel attacks that infer secrets from cache behavior, without sacrificing speed or accuracy. Additionally, integrating new technologies like AI and machine learning brings its own set of challenges, requiring innovative solutions to keep things running smoothly. The growing demand for personalized applications also calls for adaptive cache strategies that can learn and adjust in real time. It’s a dynamic field that keeps evolving, pushing the boundaries of how we understand and use cache memory.

Wrapping Up Cache Memory Management Strategies

In summary, mastering cache memory management strategies is like having a toolkit that optimizes your digital experience. Whether it’s the hardware or software side of things, these strategies shape the efficiency and speed of your devices. From FIFO to LRU, each strategy offers unique benefits tailored to different applications. As technology advances, so do our methods of managing cache, ensuring systems remain agile and responsive. With challenges like larger datasets and security concerns, continuous innovation is needed in this area. Grasping these concepts not only improves your technical acumen but also empowers you to make informed decisions in the digital landscape. Whether you’re a developer, IT professional, or just a curious digital nomad, understanding these strategies can be a game-changer for your tech toolbox.