Hey there, fellow tech enthusiasts! If you’re anything like me, you’ve probably spent countless hours tinkering with your computer, trying to make it faster and more efficient. Well, today, let’s dive into an intriguing concept that might just make you see your machine in a whole new light: the time-space trade-off in computing. Basically, it’s like a juggling act between how fast our programs can run and how much memory they need. Cool, right? Grab your coffee, and let’s jump in!

Understanding the Time-Space Trade-off

Alright, so let’s break it down. The time-space trade-off in computing is the choice between speed and storage. You can often make a program faster by storing extra information (precomputed answers, cached results, lookup tables). Or you can make it leaner by throwing that information away and recomputing it whenever it’s needed. It’s like deciding whether to carry a small backpack with only the essentials or lug around a gigantic suitcase with everything you could possibly need. In computing, this trade-off is all about optimizing the resources you have: balancing a program’s execution time against the memory it consumes.
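To make the backpack-versus-suitcase idea concrete, here’s a small Python sketch. Python is just my pick for illustration, and the function names are hypothetical. It answers “is n prime?” two ways. Trial division keeps almost nothing in memory but redoes the work on every query. A Sieve of Eratosthenes spends memory on a table up front, so each later query is a single lookup. It assumes non-negative inputs and a `limit` of at least 0.

```python
# Illustrative sketch; function names are hypothetical. Assumes limit >= 0.
import math


def is_prime_trial(n: int) -> bool:
    """Trial division: O(1) extra memory, but O(sqrt(n)) work on every query."""
    if n < 2:
        return False
    for d in range(2, math.isqrt(n) + 1):
        if n % d == 0:
            return False
    return True


def build_sieve(limit: int) -> list[bool]:
    """Sieve of Eratosthenes: O(limit) memory up front, then O(1) per query."""
    is_prime = [True] * (limit + 1)
    is_prime[0] = False
    if limit >= 1:
        is_prime[1] = False
    for p in range(2, math.isqrt(limit) + 1):
        if is_prime[p]:
            # Cross off multiples of p, starting at p*p (smaller ones are already marked).
            for multiple in range(p * p, limit + 1, p):
                is_prime[multiple] = False
    return is_prime


# Pay the memory cost once, then every "is n prime?" query is a list lookup.
sieve = build_sieve(100)
print([n for n in range(30) if sieve[n]])  # [2, 3, 5, 7, 11, 13, 17, 19, 23, 29]
print(sieve[97], is_prime_trial(97))        # True True
```

The sieve wins when you have many queries below a known bound. Trial division wins when queries are rare or the numbers are too large to tabulate.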

This concept plays a pivotal role in algorithm design. Picture this: you’re sorting a massive dataset. You can sort it in place with almost no extra memory, which may take longer, or you can spend extra memory on auxiliary structures to speed things up. Programmers make these calls all the time. Memory keeps getting cheaper, but it’s never unlimited, and speed is often the primary concern. That’s the heart of the time-space trade-off in computing: finding the sweet spot where your program runs fast enough without gobbling up all available resources.
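Here’s one way to see that sorting choice in code, again as a Python sketch with hypothetical function names. Heapsort rearranges the list in place with only O(1) extra memory and runs in O(n log n) time. Counting sort is my choice of example for the memory-hungry side. It can run in O(n + k) time, but it needs a count array of size k plus a new output list. It also only works when the keys are small non-negative integers, assumed here to lie in 0..max_value.

```python
# Illustrative sketch; function names are hypothetical.


def heapsort_in_place(a: list[int]) -> None:
    """Sort `a` in place: O(n log n) time, O(1) extra memory."""
    n = len(a)

    def sift_down(root: int, end: int) -> None:
        # Push a[root] down until a[root:end] satisfies the max-heap property.
        while True:
            child = 2 * root + 1
            if child >= end:
                return
            # Pick the larger of the two children.
            if child + 1 < end and a[child] < a[child + 1]:
                child += 1
            if a[root] >= a[child]:
                return
            a[root], a[child] = a[child], a[root]
            root = child

    # Turn the list into a max-heap.
    for start in range(n // 2 - 1, -1, -1):
        sift_down(start, n)
    # Repeatedly move the current maximum to the end and shrink the heap.
    for end in range(n - 1, 0, -1):
        a[0], a[end] = a[end], a[0]
        sift_down(0, end)


def counting_sort(a: list[int], max_value: int) -> list[int]:
    """Return a sorted copy: O(n + k) time, O(n + k) extra memory (k = max_value + 1).

    Assumes every element is an integer in 0..max_value.
    """
    counts = [0] * (max_value + 1)
    for x in a:
        if not 0 <= x <= max_value:
            raise ValueError(f"{x} is outside 0..{max_value}")
        counts[x] += 1
    result: list[int] = []
    for value, count in enumerate(counts):
        result.extend([value] * count)
    return result


data = [5, 3, 9, 0, 3, 7, 1]
in_place = data.copy()
heapsort_in_place(in_place)
print(in_place)                          # [0, 1, 3, 3, 5, 7, 9]
print(counting_sort(data, max_value=9))  # [0, 1, 3, 3, 5, 7, 9]
```

Merge sort is another common example of the memory-hungry side. It typically uses O(n) auxiliary space in exchange for guaranteed O(n log n) time and a stable ordering.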

Real-World Examples of the Time-Space Trade-off

1. Compression Algorithms: Think about ZIP files. They shrink files to save disk space, but you pay for that in CPU time whenever the files are compressed or decompressed. Less space, more time: a textbook example of the time-space trade-off in computing.

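2. Caching Strategies: Web browsers keep local copies of resources they’ve already downloaded, such as images, scripts, and stylesheets, so pages load faster on repeat visits. A bigger cache means quicker load times but more storage used. Time and space: it’s a delicate dance!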

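3. Database Indexes: An index makes lookups fast by maintaining an extra data structure (typically a B-tree or hash table) over the indexed columns. Queries speed up at the cost of additional storage, plus some extra work on every insert or update to keep the index current.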

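4. Virtual Memory Management: Operating systems let programs use more memory than the machine physically has by paging data between RAM and disk. You gain apparent space, but any access that has to go to disk is orders of magnitude slower than one served from RAM. It’s space vs. speed in action right on your computer.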

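5. Data Structures: Choosing between structures like arrays and linked lists affects both space and speed. An array stores elements compactly and gives constant-time access by index. A linked list spends extra memory on a pointer per element in exchange for constant-time insertion and removal at a known position. That’s another facet of the time-space trade-off in computing.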
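The database-index example translates directly into everyday code. In this Python sketch, the `User` record and both functions are hypothetical, and emails are assumed unique, much like a UNIQUE index. A linear scan needs no extra memory but checks record after record. A dictionary built up front costs memory proportional to the number of users and answers lookups in average constant time.

```python
# Illustrative sketch; `User` and both functions are hypothetical.
# Assumes emails are unique, similar to a UNIQUE index in a database.
from dataclasses import dataclass
from typing import Optional


@dataclass
class User:
    user_id: int
    email: str


def find_by_email_scan(users: list[User], email: str) -> Optional[User]:
    """No index: no extra memory, but O(n) comparisons per lookup."""
    for user in users:
        if user.email == email:
            return user
    return None


def build_email_index(users: list[User]) -> dict[str, User]:
    """Build an index once: O(n) extra memory, then average O(1) lookups."""
    return {user.email: user for user in users}


users = [User(i, f"user{i}@example.com") for i in range(10_000)]
email_index = build_email_index(users)

target = "user9876@example.com"
print(find_by_email_scan(users, target).user_id)  # 9876, after walking the list
print(email_index[target].user_id)                # 9876, from one hash lookup
```

Like a real database index, the dictionary has to be updated whenever users are added, removed, or change their email, or lookups will return stale results. That write-time upkeep is part of the price you pay for fast reads.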

Why It Matters in Modern Computing

In today’s world of sophisticated computing, the time-space trade-off is more important than ever. With big data and the rise of artificial intelligence, our systems are pushed to their limits. Developers need to make calculated decisions about whether to save time or space based on the specific requirements of the task at hand. It’s a bit like puzzle-solving: put the pieces together just right, and you get a streamlined, efficient solution.

Think about cloud services where time is money—literally. These services need to process vast amounts of data quickly, and here, the time-space trade-off in computing takes center stage. Striking the right balance can lead to better performance and cost savings for companies. As technology advances, managing this trade-off effectively becomes crucial to staying ahead in the tech race. So, remember, it’s not just about having powerful tech; it’s about wielding it wisely to get the best outcomes.

Applying the Time-Space Trade-off Concept

The time-space trade-off in computing isn’t just a theoretical curiosity; it’s a practical choice that software developers and engineers face every day. When designing software, there’s often a decision to be made: should the program spend more memory to save CPU time, or spend more CPU time to save memory? Answering this requires understanding the application’s specific context and requirements.

1. Performance Goals: Determine what’s more critical—speed or memory footprint.

2. Resource Availability: Consider the hardware you’re targeting, including how much RAM and processing power is actually available.

3. User Experience: Faster apps can enhance the user experience despite using more memory.

4. Cost Implications: More memory can be costly, so budget is important.

5. Application Type: Some applications require speed (e.g., gaming), while others need to be frugal with space (e.g., IoT devices).

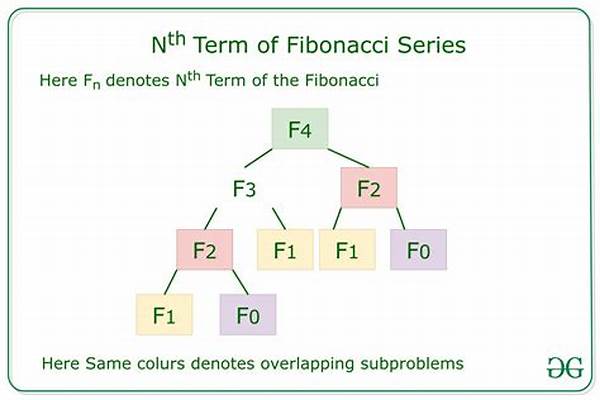

Time-Space Trade-off in Programming

When coding, I’m sure many of us have scratched our heads over whether to optimize for speed or for memory usage. This trade-off is where creativity meets logic. In practice, the decision gets made when you pick an algorithm or data structure. That choice determines which side of the scale you tip toward, and whether the program runs efficiently while still managing resources wisely.

Say you’re handling graphics processing, where speed reigns supreme: you’d likely favor an approach that minimizes time, such as precomputed lookup tables, even at the expense of higher memory usage. Conversely, on embedded systems, where space is at a premium, you might choose more space-efficient algorithms even if they run slower. Programming involves constantly making these decisions, like a chef balancing flavors in a dish: everything needs to be just right.
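A classic speed-for-memory move in performance-sensitive code is the lookup table. The Python sketch below counts the set bits in a non-negative integer two ways, and the function and table names are hypothetical. The first function loops over the set bits with no extra storage. The second spends a 256-entry table once so that each byte costs a single lookup. In Python the interpreter overhead can hide the difference, and Python 3.10+ already has a built-in `int.bit_count()`. Treat this as an illustration of the pattern, which tends to matter more in lower-level languages like C.

```python
# Illustrative sketch; the table and function names are hypothetical.
# Both functions assume a non-negative integer input.

# Spend memory once: bit counts for every possible byte value (256 entries).
POPCOUNT_TABLE = [bin(i).count("1") for i in range(256)]


def popcount_compute(x: int) -> int:
    """No table: clear the lowest set bit until none remain (one loop per set bit)."""
    if x < 0:
        raise ValueError("expected a non-negative integer")
    count = 0
    while x:
        x &= x - 1  # drop the lowest set bit
        count += 1
    return count


def popcount_table(x: int) -> int:
    """Table lookup: one list access per byte of x, at the cost of 256 stored entries."""
    if x < 0:
        raise ValueError("expected a non-negative integer")
    count = 0
    while x:
        count += POPCOUNT_TABLE[x & 0xFF]  # bits set in the lowest byte
        x >>= 8
    return count


x = 0b1011_0000_1111
print(popcount_compute(x), popcount_table(x))  # 7 7
```

An embedded build might drop the table to save those 256 entries. A graphics or compression hot loop would usually keep it, or go further and use a hardware popcount instruction where one exists.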

Wrapping Up the Time-Space Trade-off

To wrap things up, the time-space trade-off in computing is like deciding what to take on your next big adventure—pack light and possibly miss something, or pack heavy and be prepared for anything. It’s a compromise, a calculated one, and it’s what makes the field of computing both challenging and exciting. No matter how advanced technology becomes, this balancing act will always play a crucial role.

Understanding this trade-off can empower developers and engineers to make smart choices, ensuring that our tech world keeps running smoothly and efficiently. So next time you’re coding or just marveling at how fast your apps run, give a nod to this humble yet powerful concept. It’s the invisible hand steering countless innovations and breakthroughs in our digital era, proof that sometimes less really can be more.